Time has passed, so inevitably we have said goodbye to some and hello to others on the Research Data Support team. Amongst other changes, all of us are now based together in Library & University Collections – organisationally, that is, while remaining located in Argyle House with the rest of the Research Data Service providers such as IT Infrastructure. (For an interview with the newest team member there, David Fergusson, Head of Research Services, see this month’s issue of BITS.)

So two teams have come together under Research Data Support as part of Library Research Support, headed by Dominic Tate in L&UC. Those of us leaving EDINA and Data Library look back on a rich legacy stretching to the early 1980s, when the Data Library was set up as a specialist function within computing services. We are happy to become ‘mainstreamed’ within the Library going forward, as research data support becomes an essential function of academic librarianship all over the world*. Of course, we will continue to collaborate with EDINA on software engineering requirements and new projects.

Introducing –

Jennifer Daub has worked in a range of research roles, from lab-based parasite genomics at the University of Edinburgh to bioinformatics at the Wellcome Trust Sanger Institute. Prior to joining the team, Jennifer provided data management support to users of clinical trials management software across the UK, and she is experienced in managing sensitive data.

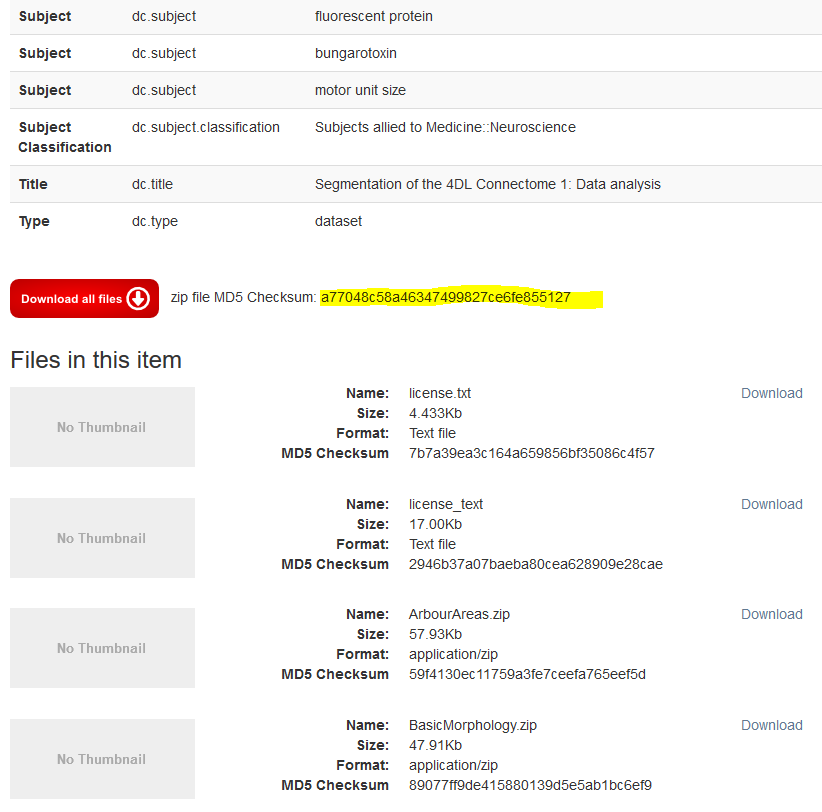

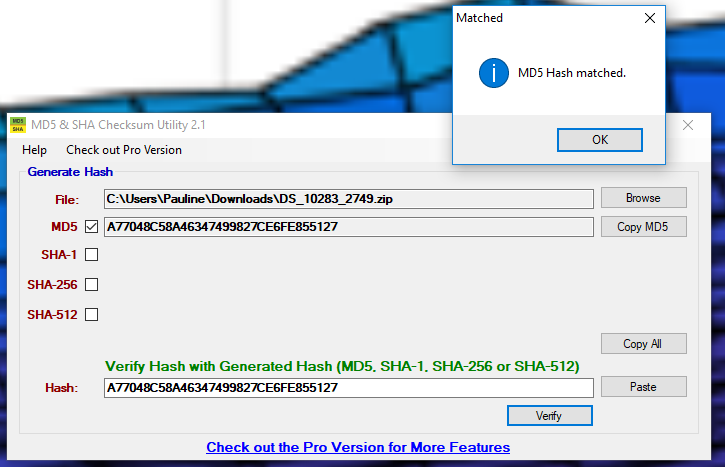

As Research Data Service Assistant, Jennifer has joined veterans Pauline Ward and Bob Sanders in assisting users with DataShare and Data Library, as well as the newer DataVault and Data Safe Haven services, and in providing general support and training along with the rest of the team.

Catherine Clarissa is doing her PhD in Nursing Studies at the University of Edinburgh. Her study explores patients’ and staff experiences of early mobilisation during mechanical ventilation in an Intensive Care Unit. She has a good knowledge of Research Data Management best practice, which she has expanded by taking training from the University and by developing a Data Management Plan for her own research.

As Project Officer she is working closely with project manager Pauline Ward on the Video Case Studies project, funded by the IS Innovation Fund over the next few months. We have invited her to post to the blog about the project soon!

Last but not least, Martin Donnelly will be joining us from the Digital Curation Centre, where he has spent the last decade helping research institutions raise their data management capabilities via a mixture of paid consultancy and pro bono assistance. He has a longstanding involvement in data management planning and policy, and interests in training, advocacy, holistic approaches to managing research outputs, and arts and humanities data.

Before joining Edinburgh in 2008, Martin worked at the University of Glasgow, where he was involved in European cultural heritage and digital preservation projects, and at the pre-merger Edinburgh College of Art, where he coordinated quality and accreditation processes. He has acted as an expert reviewer for European Commission data management plans on multiple occasions, and is a Fellow of the Software Sustainability Institute.

We look forward to Martin joining the team next month, when he will take up the role of Research Data Support Manager, providing expertise and line management support to the team as well as senior-level support to the service owner, Robin Rice, and to the Data Safe Haven Manager, Cuna Ekmekcioglu, who recently moved into that role from her previous one as lead on training and outreach. Kerry Miller, Research Data Support Officer, is actively picking up Cuna’s former training and outreach duties and making new contacts throughout the University to find new avenues for the team’s outreach and training delivery.

*The past and present rise of data librarianship within academic libraries is traced in the first chapter of The Data Librarian’s Handbook, by Robin Rice and John Southall.

Robin Rice

Data Librarian and Head, Research Data Support

Library & University Collections