Hello, my name is Keith Munro, and on March 4th 2024 I began my new role as a Research Data Support Assistant. Immediately before joining the Research Data Service (RDS), I studied for a PhD in Computer and Information Science at the University of Strathclyde. My thesis examined the information behaviour of hikers on the West Highland Way (see below for a photo of me during data gathering). It focused in particular on the embodied information that walkers encountered, the classification of information behaviour in situ, and the well-being benefits of the activity. I was lucky enough to present at the Information Seeking In Context conference in Berlin in 2022, and I am still working on publishing a number of the findings from my thesis in the months ahead. I passed my viva on February 2nd, so the timing of starting this job has been excellent.

Before my PhD, I studied for an MSc in Information and Library Studies, also at the University of Strathclyde, so I had always planned to work in the library and information sector. However, as my Masters degree was finishing during the outbreak of the Covid-19 pandemic in spring/summer 2020, I decided to take an interesting diversion (the scenic route, if you will) with the PhD! My Masters thesis was on the information behaviour of DJs, motivated by my own experience as a DJ: something I was lucky to do, if not exactly high-profile. I was very fortunate to win the International Association of Music Librarians (UK & Ireland branch) E.T. Bryant Memorial Prize for this work, which is awarded for a significant contribution to the literature in the field of music information. Findings from it have since been published in the Journal of Documentation and Brio.

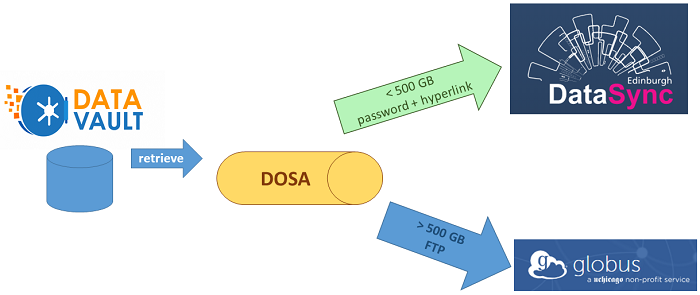

Since starting my new role, I have been greatly impressed by the team I have joined. They all bring a wealth of experience from across the academic spectrum, and they have been very warm in welcoming me and generous in sharing their knowledge. I hope I can bring my study and research experience to complement what the RDS team is doing, and I am excited to be learning more about research data management. The size of the University of Edinburgh can be daunting, and learning all the acronyms will take some time, I suspect. But the range of research I have already encountered while reviewing submissions to DataShare has been fascinating, from Martian rock impacts to horse knees, and I'm sure that will continue to be the case!