In this blog post, Dr Eleni Kotoula, Lead Research Facilitator at the University of Edinburgh, writes about CERSE and its most recent event.

What is CERSE?

CERSE is a community like no other! It offers an excellent opportunity for Research Software Engineers (RSEs) to get support and recognition for their work. In addition to RSEs, CERSE welcomes anyone interested in the development, use, support or management of research software. Researchers, research support staff and research data professionals can therefore get involved, expand their network and broaden their understanding of research software engineering. To find out more, have a look at the CERSE Meeting Handbook.

A summary of CERSE’s 7th meeting

Members of the CERSE community across Edinburgh came together earlier this month in the Bayes Centre for the first post-pandemic meeting. After a long break from activities, the organisers from the University of Edinburgh's Digital Research Services, EPCC, the Software Sustainability Institute and the Centre for Data, Culture and Society were keen to restart the meetings.

Mario Antonioletti opened the meeting with a brief introduction to the RSE movement and a look back at previous meetings in Edinburgh. Mike Wallis, Research Services Lead at the University of Edinburgh, gave an overview of the Edinburgh Compute and Data Facility, highlighting its data storage, cloud and high performance computing services. Andrew Horne provided an update on EDINA's ongoing project to develop Automatic Systematic Reviews. Mario Antonioletti then presented EPCC and its services, such as ARCHER2 and Cirrus, as well as the important work of the Software Sustainability Institute. After the short talks, Felicity Anderson, PhD candidate in Informatics and Software Sustainability Institute Fellow, led an ice-breaking activity, followed by a networking session. All presentations are available here.

Next steps

The CERSE community has the potential to grow and flourish in a region so rich in research-intensive institutions and academic excellence. We aim to hold monthly meetings, alternating between face-to-face and virtual formats. To do so, we need active participation from those interested in the RSE community. There are different ways to get involved: attending meetings, talking about your relevant work, or volunteering to help organise a future meeting. For us in Digital Research Facilitation, CERSE offers the opportunity to meet and connect with researchers, RSEs, IT and research support staff who share our passion for best practice in data-intensive and computational research. That's why we have been actively supporting this community and strongly encourage anyone interested to join us. We look forward to meeting you at a future CERSE meeting, either in person or online.

How to get involved

Join the Joint Information Systems Committee (JISC) mailing list: http://www.jiscmail.ac.uk/ed-rse-community

Follow CERSE on Twitter: https://twitter.com/cerse7

Join the Society of Research Software Engineering: https://society-rse.org/join-us/

Dr Eleni Kotoula

Digital Research Facilitation

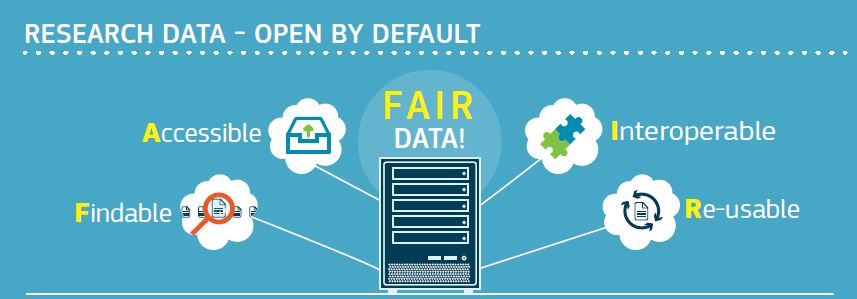

Thirdly, what services are provided to help researchers make their work public? Most universities provide support such as a data repository (except for LSE), Research Data Management support, Open Access to publications and theses, and guidance on sharing research software. A few provide support for sharing protocols. Some universities have started hosting an open research conference, for example, the UCL Open Science Conference 2021, 2022,

From the Arts