The Data Vault project is a collaboration between the University of Edinburgh and the University of Manchester. Most of the funding from the Data Spring programme has been allocated to software development effort at both partners, with a small proportion set aside for travel costs.

The intention of the three-month project is to create a proof-of-concept Data Vault system. Months one and two covered the scoping, use case, requirements, and design phases. Month three will be spent developing the software.

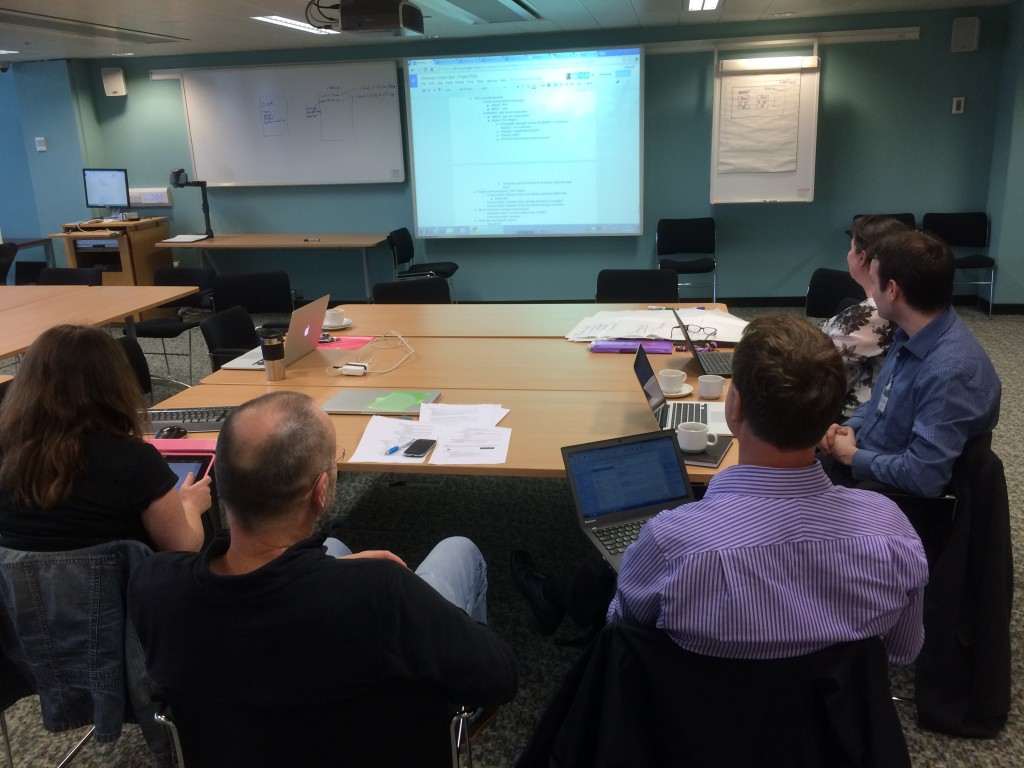

Three of us (Robin, Claire, Stuart) are due to travel to Manchester University next week to start writing the code with their project staff (Tom and Mary). We’re taking the approach of a ‘hackathon’ – all of us coding together in the same room! This should allow rapid development, as communication will be easy.