This is a guest blog post written by Veronica Cano, Open Data and REF Manager.

The CAHSS Research Cultures team organised the half-day event “AI, Openness & Publishing Futures”, which took place at the Edinburgh Futures Institute on 13 November. Following our previous half-day event earlier in 2025, “Open research issues and prospects in the Arts, Humanities and Social Science”, the focus shifted towards exploring the dynamic interplay between AI, open research, and the publishing industries. The event featured Dr. Ben Williamson, Dr. Lisa Otty and Dr. Andrea Kocsis, who each discussed how AI is reshaping research practices and publishing.

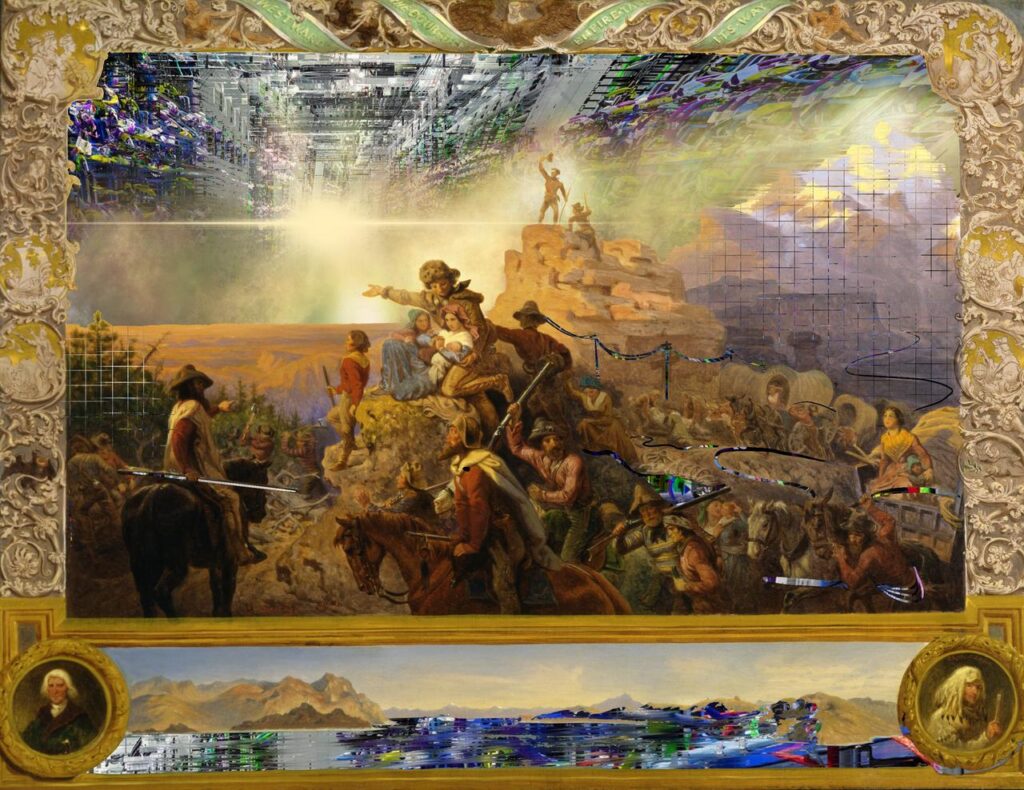

Highlighting risks of new forms of colonisation in the digital realm, this image was shared by Dr Otty as part of her presentation. Image: Hanna Barakat & Archival Images of AI + AIxDESIGN / https://betterimagesofai.org / https://creativecommons.org/licenses/by/4.0/

Critical Evaluation of Academic Content Commercialization

Dr. Ben Williamson shed light on the commercial motives of publishers and technology giants in harnessing AI to process academic content. He drew on his recent work with Janja Komljenovic to argue that emerging publishing practices transform scholarly work into data assets, leveraging AI to maximise profits, often at the expense of academic integrity and control over research outputs. Referencing the work of Mirowski, he linked these developments to wider moves around commercialised platform science. Drawing on his experience as a journal editor, Ben highlighted instances where significant journal archives, like those from Taylor and Francis, were sold to AI companies, often without much transparency. These cases underscore a concerning trend toward the privatisation of academic knowledge and raise questions about its impact on open research and publishing.

Balancing Sustainability with Open Research Practices

Dr. Lisa Otty provided an analysis of sustainable AI use, noting the environmental impact associated with the growing computational demands of AI systems. She highlighted that while AI offers substantial benefits, such as efficiency in research and improved accessibility, it also carries significant energy and carbon footprints. She suggested practical strategies, such as using smaller, more efficient AI models and adopting sustainable software engineering practices, to mitigate the environmental impact of digital research tools. Making the most of the benefits of AI requires careful judgement about what is worth using ‘maximal computing’ for, and where more sustainable, possibly smaller-scale practices are appropriate and sufficient. More information about this is available on the Digital Humanities Climate Coalition website: https://sas-dhrh.github.io/dhcc-toolkit/index.html.

Emphasising Open GLAM Data and AI Integration

Dr. Andrea Kocsis highlighted the longstanding use of AI within the GLAM (Galleries, Libraries, Archives, Museums) sectors. Her presentation provided a historical timeline showing the evolution of AI technologies in these institutions, noting significant shifts towards more advanced machine learning and generative AI systems in recent years. Reflecting on the work being done at the National Library of Scotland (NLS), including its advocacy for open data to foster research and innovation while ensuring ethical compliance and data stewardship, Andrea emphasised the necessity of responsible, open-data practices to mitigate risks such as bias and loss of metadata context, which can accompany AI integration. Ongoing projects at NLS highlight both the promise of responsible AI in the GLAM sector and the creative possibilities unlocked by open data, exemplified by Andrea’s Digital Ghosts exhibition and its innovative use of web-archive material.

Community Response and Forward Thinking

The event moved on to a group discussion framed by extracts from blogs, reports and press articles on different issues regarding AI and publishing. The texts sparked thoughtful responses from the audience, generating insights into how AI facilitates the monetisation and privatisation of research and raising questions about what the open research community should do in the face of the risks posed by AI. The pressure on researchers to publish frequently has become a playground for AI-generated outputs, resulting in unethical practices such as paper mills. The impacts are many, with the erosion of public trust in research being one of the main ones.

One of the attendees reflected afterwards: “… as it related to publishing, I got the impression that there was a sense of resignation, that it is too late, because the articles have already been sold and in many ways, we cannot opt out from AI (the google/bing summaries when you look something up, suggestions in Word, etc.) in our workplace, but also in our personal lives… Perhaps giving researchers advice on what individual action they can take, while showing what the sector is advocating for would be helpful.”

The role of higher education not only in grappling with current realities but in shaping future practices through individual and collective action was seen as extremely important, and conversations included how students can be engaged with these issues. Participants highlighted a need for ongoing dialogue and adaptive strategies as the landscapes of AI, open research, and publishing continue to evolve rapidly.