Since April I have been an intern with the University of Edinburgh’s Cultural Heritage Digitisation Service (CHDS) and the Centre for Data, Culture and Society (CDCS), looking into text extraction processes at the University, both in library practice and in how they are taught within digital scholarship. Throughout the internship I have had the opportunity to carry out independent research and to hold discussions with staff across the Library and University Collections (L&UC) to get a more in-depth understanding of text recognition processes.

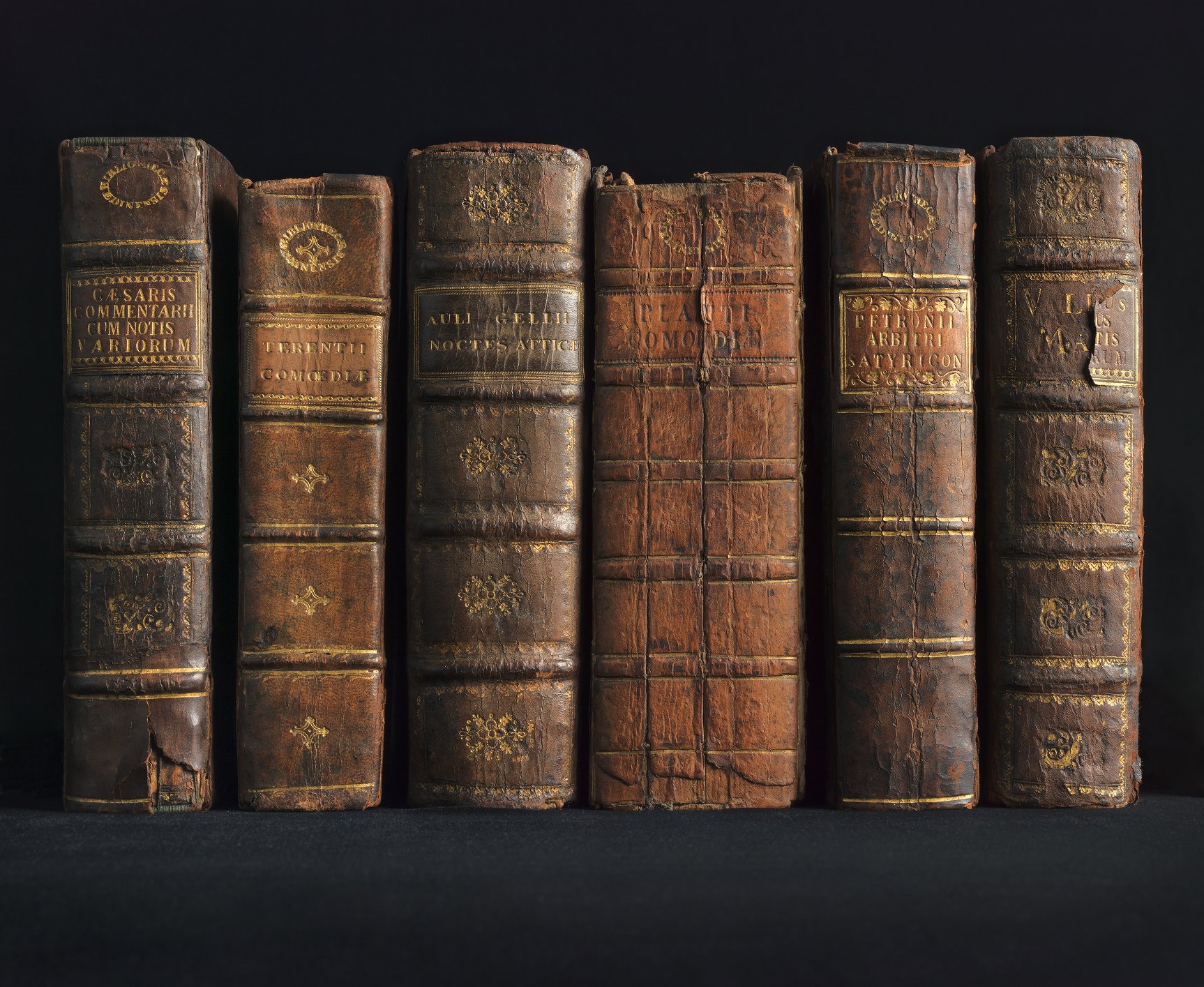

Text extraction is commonly done through a process called Optical Character Recognition (OCR), where software analyses an image of text, recognises the writing and creates a text output. In older technology this was done on a character-by-character level, but many OCR engines now incorporate deep learning and natural language processing models for more advanced text recognition. This is valuable for cultural heritage as it provides wider access to items in the collection. The generated text can be presented in a range of ways, such as searchable PDFs, downloadable text datasets, or side-by-side displays of document images and their text. OCR technology has been widely used by libraries and archives for the past twenty years and has been developing to produce higher quality text with fewer errors. OCR has reasonably good success rates with good quality printed texts because print is standardised. Handwriting lacks that standardisation, so newer technologies have been developed specifically to target handwritten text recognition. For more general information on text extraction processes, see the section on text extraction in the CDCS Training Pathway ‘Managing Digitised Documents’.

In the past, L&UC have used various software and approaches for different projects, depending on the materials and resources available. My task has been to evaluate these approaches, look at what other academic and research libraries have been using, and make recommendations for the best options moving forward. Many printed books are available as searchable PDFs through the Library and University Collections’ Open Books site, relying on a text layer beneath the PDF created through OCR with ABBYY FineReader. ABBYY FineReader is commonly used in cultural heritage institutions as its OCR is easily integrated into the digitisation process and it provides a reasonably good level of text recognition, although there are still errors, as OCR is never 100% accurate.

My research identified ABBYY FineReader as one of the more reliable automated paid services, able to process large volumes of text within the digitisation process, in several languages, and with a reasonable degree of accuracy on some materials. However, there are other viable options out there, including the open-source OCR engine Tesseract. Tesseract requires a more hands-on approach and a more advanced understanding of programming than automated options, but it can provide greater flexibility and has been found to perform well on a range of materials (Olson & Berry, 2021). Resources such as staff hours, skill levels and training, as well as departmental and project budgets, are key factors in determining which text extraction software should be used. How the text output will be stored and presented by L&UC is also an important consideration, as we need to be able to support the use of the text documents created, whether this is through searchable PDFs, text and image presentation alongside each other, or other options.
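To make "accuracy" a little more concrete, here is a minimal sketch of one common way OCR output can be compared against a hand-checked transcription: the character error rate, which is the edit distance between the two texts divided by the length of the transcription. This is an illustration only and not necessarily how the engines above were evaluated; the function names `levenshtein` and `character_error_rate` and the sample strings are my own assumptions.

```python
def levenshtein(a: str, b: str) -> int:
    """Smallest number of single-character insertions, deletions
    and substitutions needed to turn string a into string b."""
    # Keep the shorter string in the inner loop to use less memory.
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution_cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + substitution_cost,  # substitution or match
            ))
        previous = current
    return previous[-1]


def character_error_rate(reference: str, ocr_output: str) -> float:
    """Edit distance divided by the length of the reference text."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(reference, ocr_output) / len(reference)


# Hypothetical example: a hand-checked line and an invented OCR reading of it.
reference = "The quick brown fox"
ocr_output = "Tbe qu1ck brown f0x"
print(f"Character error rate: {character_error_rate(reference, ocr_output):.1%}")
```

A lower rate means fewer corrections would be needed, so running the same pages through two engines and comparing the rates gives a rough, like-for-like measure. It does not capture everything that matters in practice, such as layout or reading order.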

Due to the variety of materials and projects that CHDS covers, there is currently no one-size-fits-all option that will cover all bases for text recognition. However, the technology is developing all the time, with more options available to cover even more languages, easier adaptation for tricky-to-scan materials and improvements in text quality. Hopefully the work done during my internship on OCR approaches in L&UC and the wider sector will be useful in directing how we approach text extraction and the presentation of our text materials, especially as we make the move to our new digital collections platform.

As part of the internship, I have designed a workshop and learning resource on hands-on text extraction for the CDCS. More details for the workshop to follow via their events page as we move into the 2023/24 academic year.

This internship with CHDS and CDCS has given me an opportunity to work with two wonderful teams across the university. It has been highly valuable in gaining industry experience with the Library and University Collections and investigating current trends in text extraction technology use across the cultural heritage sector more broadly. My work with CDCS in creating teaching and training resources in the form of workshops and asynchronous learning resources has also been highly valuable and I hope to bring these research and critical pedagogy skills to my personal research and wider teaching at the university.

By Ash Charlton, Optical Character Recognition Intern and second-year PhD student based in History, Classics and Archaeology.

A further blog from Ash about this internship can be found here https://blogs.ed.ac.uk/cdcs/2023/08/14/from-image-to-text-experience-of-an-optical-character-recognition-intern/

References

Centre for Data, Culture & Society. ‘Managing Digitised Documents Training Pathway’. (2022), https://www.cdcs.ed.ac.uk/training/training-pathways/managing-digitised-documents-pathway.

Olson, Leanne, and Veronica Berry. ‘Digitization Decisions: Comparing OCR Software for Librarian and Archivist Use’. The Code4Lib Journal, no. 52 (22 September 2021), https://journal.code4lib.org/articles/16132.
