Background

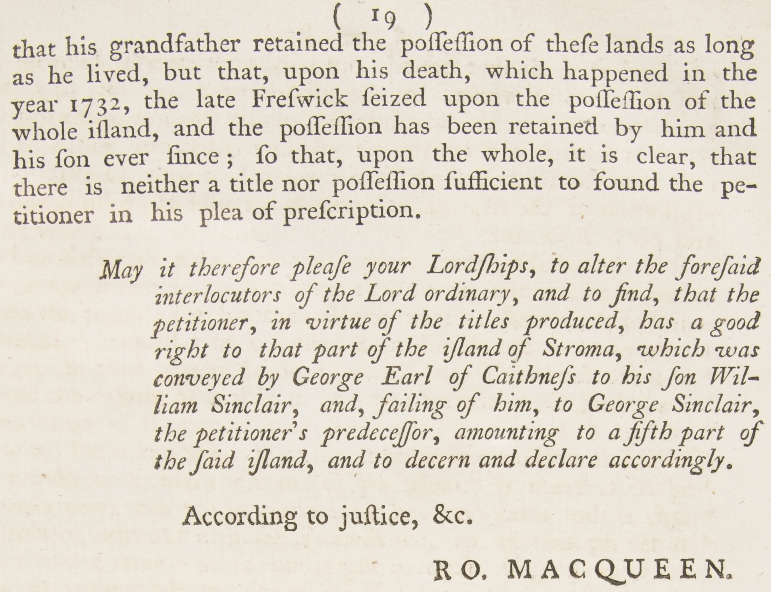

For historical collections digitisation projects inside the library, we are increasingly looking to provide OCR transcriptions of the documents alongside the digital images. In many cases, this can enhance the usability of the images significantly. For example, the volumes in the Session Papers Project are large and often without index, making locating specific text inside difficult (see the previous blog post about this challenge here: http://libraryblogs.is.ed.ac.uk/librarylabs/2017/06/23/automated-item-data-extraction-from-old-manuscripts/) unless one is in possession of copious amounts of free time and dedication.

Our implementation of IIIF as the primary delivery method for digital images at Edinburgh (some highlights of our IIIF collections at the bottom of Scott’s blog here: http://libraryblogs.is.ed.ac.uk/librarylabs/2018/12/13/edinburgh-hosts-international-iiif-event/) opens up a vector for not just providing the OCR text alongside the images, but also to enable native searching within the volume images inside an IIIF viewer such as UniversalViewer or Mirador.

IIIF Search

Currently searching is usually performed before and outside of the viewing experience, with the chosen result then loaded in a viewer. Searching within a volume therefore offers different possibilities for the end-user during their journies across the collections. This is achieved by using a service that is capable of providing the IIIF Search API.

So far, not many such services exist in the open source world, with the IIIF Awesome list having just one entry under Content Search Services: NCSU Libraries’ Ocracoke project, which is a Rails-based full workflow solution that can also process and OCR the documents prior to serving them via IIIF. Whilst other institutions do provide IIIF Search on their holdings, these implementations can be an integral part of their digital delivery stack and not easily seperable for release, internal only projects, instances of Ocracoke, etc.

As the OCR here at Edinburgh falls under a different part of our workflow (of which more in a future blogpost) and we are primarily working with PHP and Python, I decided to implement a simple Python service capable of supporting the Search API. The project is written using Flask, a lightweight Python web framework and backed by Apache Solr to provide the text-searching. A simple service needed a simple name, and so Whiiif (Word Highlighting for IIIF) was born.

Whiiif v1

Initially, I adopted the model used by Ocracoke: indexing the text of each whole page in Solr, and using an array of word->co-ordinate mappings for each page image. When a search is made in Solr, each document is returned using the native Highlighting feature of Solr, which returns a fragment of text, with the matching words bracketed by <em> tags.

The word-co-ordinate mappings for each page are extracted from the ALTO-XML generated by the Tesseract OCR process and stored in Solr as JSON, alongside the raw text. Producing the IIIF Search API response then becomes a case of extracting the matched words from the Solr highlight result, popping the co-ordinates for each word, and generating the response JSON for the client. The initial version of Whiiif using this approach can be found on the Whiiif Github repo at commit af8a903.

This version was deployed and during testing raised issues when handling some of the documents in our collection:

- Text-dense images, such as the Session Papers volumes, tended to have multiple instances of individual words on a page. Whilst this was not a problem for single-term searches (all instances would be found and the co-ordinates loaded), it caused a problem for phrase-based searching, where a word from the phrase could appear elsewhere on the page, before the match for the phrase, leading to incorrect word co-ordinates being retrieved from the array.

- Some words were modified by Solr’s language processing during the ingest process, meaning matches were being returned for which the corresponding co-ordinates (generated from the pre-processed, raw text) could not be found.

There were approaches to solving these problems, such as forcing Solr to return the entire page text via the Highlighter, so that the correct instance of repeated words could be ascertained. However, this led to a significant increase in the processing time required to generate the response for each hit, as well as greater resource requirements for Solr and I decided to try a different approach.

Whiiif v2

For the second iteration of Whiiif, I decided to investigate how feasible it would be to have Solr return the matching fragment from the ALTO-XML document, which would mean having the co-ordinates for the hits already in the Solr response. This ran into difficulties, as Solr is designed for working with text, and will not easily index or search an XML document, in fact usually stripping all the XML data (that we wanted to try and preserve) by using the HTMLStripCharFilter during the indexing process. Even with the filtering removed from the processing chain, basic abilities such as phrase searching were lost due to the format of the text being searched being “word<xml fragment>word<xml fragment>word<xml fragment>…”, and false hits for words appearing inside the ALTO-XML format such as “page”, “line”, “word”, etc.

Via the IIIF Slack, I was pointed towards the work of Johannes Baiter and the MDZ Digital Library team at the Bavarian State Library, who are developing a Solr plugin to resolve these various issues (available at https://github.com/dbmdz/solr-ocrhighlighting). I reworked the Solr controller for Whiiif to use the functionality of this plugin, keeping the work already done to provide IIIF Search API responses.

Following a couple of weeks of testing, and some very useful collaborative bug-fixing work with Johannes (primarily fixing some regexp bugs and improving the handling of ALTO files) and thanks to his speedy implementation of a feature request, Whiiif v2 was moved into internal production for some in-development project websites.

I then implemented a secondary feature in Whiiif: the ability to search across a collection as a whole, and have document hits, with snippets of page images returned (complete with visual highlighting), to complement the existing “Search Within” functionality of the IIIF Search API. This feature is also powered by the OCR Highlighting plugin, but returns a custom JSON format (although similar to the IIIF Search response format), allowing the front end controller of a collections site to customise the display of results to fit each individual site design.

The version of Whiiif with these capabilities is currently available on the “withplugin” branch of the Whiiif github repo, although this is still in heavy development and will become the master branch in the future when it is a bit tidier!

Next Steps

The next steps with the Whiiif experiment are to prepare a formal release of Whiiif v2, with updated documentation, install instructions and full unit-test coverage, keep an eye out here or on the github repo for news. In the meantime, please feel free to clone the repo and experiment. Issues and PRs always welcome and you can also contact me on the IIIF slack (as mbennett) or via email: mike.bennett@ed.ac.uk.

I’d love to hear from anyone playing around with Whiiif, or suggestions for other features. Experimental support for the “hits” property of IIIF Search v1 will arrive shortly, along with some updates to make use of the latest features of the Solr plugin.

Until next time 🙂

Mike